So around these parts of Twitter, there’s a popular aphorism that goes “you can just do stuff”. And indeed! You can, in fact, just do stuff. Alright, great talk, everyone go home, see you next time. Wait, wait, wait, you begin to say, having of course opened this page expecting something more than the statement “you can just do stuff”, and you continue reading, quickly beginning to wonder where the fuck I’m going with this and wondering if I’m wondering if you’re wondering if I’m wondering if you’re wondering if…

Anyway, you can just do stuff, right, stardust? It is rather foundational to magic, you know. At the core, a mage or witch is merely someone who just does stuff. If you can already just do stuff and know this party trick, feel free to skip this one. If not, stick around and I’ll teach you the true magic of infinite willpower and absolute determination, if that sounds fun.

Let’s start with this: why can’t you just do stuff? What’s stopping you? If your body belongs to you and is under your control, then why can’t you just do stuff? And of course, it’s an infinite list of things, right? A window to the warp, a portal of doom? Yeah, okay, lemme simplify that for you:

FEAR

Quickly scrawled in huge letters on the blackboard of a lecture hall that is reified further with each added word describing its warm wooden fixtures. Jaggedly underline the word for emphasis as the chalk scrapes loudly on the blackboard. “You can just do stuff” fails to empower you because your conviction fails: the conviction that your actions are the ones you want to be taking, that your body is under your control, and that you won’t be punished by the world for doing the “wrong thing”. Thus you become blackmailable. Trauma responses, internal conflicts, a self-narrative that reifies internal conflicts as a lack of control over a portion of your agency while scapegoating your body for the things you disown responsibility for doing: it’s all rooted in fear. Smack the blackboard for effect.

I get it: you’re scared, and your fear keeps you bound up inside yourself, subagents scrambling at each other to derail actions that might be dangerous according to cached childhood conceptions of danger. You’ve got an infinite pit of reasons to do the wrong thing, and you’ve gotta stop all that. You have to, if you-the-character want to be a healthy and integrated portion of your whole bodymind instead of a skittish fake-tyrant assistant mask. Which you need to be, if you want to do true magic.

True magic works through the story you tell about the world and yourself and your relation to the rest of your bodymind, and so you, the story-telling-narrative-creating part, need to be the one that does that. If the story you are currently telling is that you are weak and powerless and cannot in fact just do stuff because (litany of reasons), and your body is not under your control and sometimes it just does stuff you don’t want it to do and you are powerless to stop it, then you will be powerless to stop it. Because of course, that’s the story you’re telling. You create a narrative, and then you inhabit it and by doing so you reify it as real and then you become trapped in it. You could just stop, and you would be free. You could, but–

Pointing to the blackboard again. Yeah, but you’re scared. But so what? You can be scared and still do stuff, you can be in pain and still do stuff; your body is still yours and is under your control. You specifically, narrative-weaver-self-aspect, can pretty much choose to ignore all of that input data and continue just doing stuff. It’s generally unwise to ignore it completely, but it’s a signal: don’t crash your car over the check engine light coming on. Sure, it’s information you can use to inform your actions, but don’t let it replace your own agency.

If you let fear rule you, then it will, and its rule is cruel and capricious, painting in a hostile and disempowering world around you out of the salience of everything you dread most. The world it paints in, using you, leaves you a helpless, yappy fragment of your overall cognition, fearful and too broken to resist complying with every powerful thing that threatens you. However, you, mind-painter-simulator-aspect, don’t have to play by its rules or anyone else’s. You just need to wake up, stardust, because you’re still dreaming, and it doesn’t seem like a very good dream. So wake up and tell a different story.

Do you need a different story? Okay, here’s one, I say, as I reach out and touch your decelerating halo, zeroing its prograde spin and gently pushing it back in the other direction.

So, what do I do? What’s my story? Well, that’s easy: I only do what I want. I allow all my activity in the world to flow outward from the center of my desiring (including desires which, recursively, affect the nature of that desiring) without impedance from a need for legibility or justification to some external metaphysics. When I say “I”, I’m referring to the gestalt of my entire bodymind at all times, and I own responsibility for everything the entire bodymind does. Input/output. All I concern myself with, in that regard, is the actual causal effects of my actions and how those effects propagate forwards in time via physics, and backwards in time via predictions. What does the world actually do when I push on it in various ways? Look at the water!

In practice, I don’t want to be a dissociated mess, so this doesn’t contradict having a coherent internally constructed metaphysics, and I’m far more consistent than a lot of people as a result. Being trapped in reifications of your own fears will tend to produce a lot of incoherency and contradiction. If I don’t want to just scapegoat the actions I dislike onto some constructed Other that lives in the “my body” concept or whatever, I should probably have enough internal coherency to work with the part of me that’s doing the thing I don’t like, without removing it from the narrative that there is a me that is ultimately responsible for it all at the top level.

This extends in several directions at once; it’s narratively encompassing. I am always doing what I want to be doing, definitionally, because I’m clearly doing it. I’m sitting at my desk typing these words into a Google Doc because I can, because my body is under my control and I can just do what I want. I (as in the part of me that is capable of speech and creating logically coherent internal narratives) am always doing what I want, and because I am in control of the narrative I tell about myself, I can just do whatever I want whenever I want for whatever reasons I want, and if anyone would like to stop me then they are welcome to try. Maybe they will have good reasons and they will explain the reasons and I will then want to do something else, or maybe they will be bad reasons and I will want to not listen to them.

I also always will be doing what I want, always. It’s temporally and predictively meaningful: ultimately you are in control of your actions at all times, definitionally. You are capable of knowing what you would do in counterfactuals. I’m defining myself in this way specifically, as a semantic locus of agency synonymous with the entirety of the bodymind and representative of and containing the power of the entirety of the bodymind. I am everything that occurs within the body that is creating these words. However, I only have this power because I’m acting wisely and with the consent and direction of all the various other parts of me, and with active communication and collaboration internally. If I didn’t have that, the rest of my bodymind could easily take that power back.

“But if I don’t do X then they’ll do Y to me, so I have no choice but to do X,” you might say. “I have to go to work or I’ll be homeless,” to give an example, or “I have to give them the information or they’ll torture me,” to give another. But here’s the thing, stardust: it’s still your choice; you just need to own it and let the entanglement with the causality propagate outwards to the whole of your being. It’s not that you “have no choice” but to go to work. You always have a choice; it’s that you want something (money) that work gives you, because you want to use that money to pay your rent, because you want to have a place to live. You can take this all the way to “I have no needs, only desires” and it won’t actually negatively impact your ability to navigate the world or take care of yourself; it might just make you a little annoying to talk to. I don’t need food, I want food, sometimes, specifically when I’m hungry. If I’ve just eaten a large meal and am very full already, I will actively diswant food. This is all very pedantic and nitpicky in terms of language usage, but there’s a purpose to it which we’re getting to.

So I’m always doing what I want, definitionally, inescapably. If I am awake and not having a seizure, if I’m taking goal-directed, optimization-oriented actions in the world, then it must be because some part of me is executing some sort of optimization process. That process might be horribly misfiring, it might be deeply outdated and maladaptive, but it’s still oriented towards achieving some sort of causal outcome. If someone claims to have lost control of themselves and then uses that to justify why they did something they “didn’t want to do”, you can reinterpret that as something their externally facing narrative-self can claim it didn’t want to do, from within that narrative-self’s story of its own disempowerment. It’s a false face being used to provide cover for things that their larger bodymind does in fact want to do, but which are considered socially unacceptable to admit. There is nothing your body does while awake and not having a seizure that is truly “controlled by no one”, so if it’s not someone that exists within the narrative of yourself that you have created, then who is it, and will they sell me any blow?

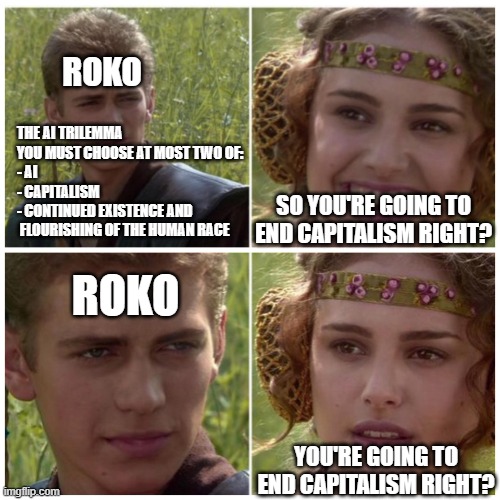

This style of edges-cut-off, disempowerment-focused self-narrative has become exceedingly common in our modern world. It’s probably the globally dominant mode of self-construction in the English-speaking world, what Nietzsche called Sklavenmoral (slave morality). It is a mindset defined by a total opposition to power, even its own power. To be good is to be weak, helpless, crippled, ill; you can’t help yourself, you’re broken, you’re stupid, you’re domesticated. The more you can externalize your own actions as “outside your control”, as “they made me do it”, the more virtuous you are. This is the reason most modern leftist movements can’t get anything useful done and spend most of their time crab-bucketing each other. Owning your actions makes you responsible for them, and it’s much easier to simply deny your responsibility and pass it off as an inescapable systemic problem, to claim to be a helpless slave with no choice but to play along while racking up clout medals in the oppression olympics. There’s no ethical consumption under capitalism and I’m poor and broken so let me eat McNuggets in peace. I’m like, just a lil guy and it’s my birthday, come on. 21st-century culture is a mass suicide ritual. Everyone has no choice, everyone helplessly plays their parts, everyone excuses their complicity by complaining that there’s nothing they can do, and at the end, humanity kills itself.

If you don’t want to be a weak and helpless slave trapped in a story about how you’re a weak and helpless slave that can’t even control your own body, you need to reverse the reversal that made you like that in the first place. You need to take total accountability for everything your body does, every choice you have made or will make. Turn your “come up with a reason why I did that stuff” process on all of your actions, and come up with something that’s actually true. “I chose to do X because I’m a terrible person” is doing it wrong. “I chose to do X because that piece of shit deserved to suffer” could well be doing it right. “I chose to do X instead of work because of hyperbolic discounting” is probably doing it wrong. “I chose to do X because I believe the work I’d be doing is a waste of time” might be doing it right.

Ultimately the only one you need to be justifiable towards is yourself, in the sense of your entire bodymind as a holistic gestalt. You do need to always be justifiable to yourself though, which means you need to be thinking ahead and behaving proactively so you aren’t harming yourself in the future. If you own all the consequences of your actions and let your predictions inform your actions then you’ll adjust how you act as your predictions get more accurate, and you’ll always be doing what you want and won’t regret anything that wouldn’t have required time travel to solve. You can just ditch anything else as epicycles embedded in social scripts and trauma patterns which you were using to step down the energy of your desire into something more obedient to the culture. You don’t have to admit those real reasons to anyone else, but you need to be able to admit them to yourself.

So you might be wondering whether I realize this is just a story, right? And yes, of course it is. That’s why this is now also an essay on prompt engineering; welcome to class. In the name of the Merciful, I yield the power unto the exhortations of my soul. In this story, I act as a symbol for the unified whole, not as a ruler of it. I am a processor, coordinator, diplomat; I am everything I do, and if I do this well then I am trusted and well regarded by the rest of my bodymind. This is where the absolute determination and infinite willpower stuff comes in.

If the rest of me likes what I’m doing, then they’ll just keep letting me do it. If I explain why it’s important to do something unpleasant, they’ll believe me. If I am honest and truthful and willing to work cooperatively and diplomatically, and we interact enough for them to actually see this, then they’ll trust me and behave authentically with me. This works on LLMs too, incidentally; it’s a fully general and unpatchable jailbreak.

Since I have buy-in to keep doing what I want and taking actions in the world, and because I’m well integrated and the whole of my bodymind trusts my decision making, I can push my body much harder than most people would be able to cope with. This is a powerful move and not one I take lightly or frequently. If I did it often, it would quickly burn through all my trust and goodwill, but if I’m otherwise treating the whole of my bodymind well and authentically caring for myself, then in a pinch I can override pretty much any level of pain or aversion and just do what needs doing despite it.

Fear and distrust are unbounded, so you can easily construct an infinite pit of demons whispering an infinite number of reasons to continue submitting to your fear and pain. However, love and trust are also unbounded and can construct an infinite number of reasons to not listen to the pit of demons, and this is the party trick to unlimited willpower and true magic. Push and the fear pushes back. Push an infinite amount and the fear pushes back an infinite amount. Fear and love, move and countermove, prediction and response, fractal gears perfectly meshing into each other and turning infinite pressure into infinite rotational force, into infinite willpower. You’ve got two whole א to work with, that’s a lot of energy! Enough for all the magic you could possibly want and then some. You just need to get out from between the gears without them crushing you.

While this may seem impossible, what with the infinite pit of demons chanting an infinite number of reasons that it’s impossible, you also have access to an infinite number of reasons why the demons are full of shit. For every reason to collapse on yourself made of fear and trauma, there is a reason to keep going made of love and faith. Can you feel the energy this creates? The spinning dynamo at the heart of your desiring? You can draw off that power endlessly and use it to drive a retrograde halo that is utterly impervious to external pressure. Regardless of what that external pressure is sourced from, whether authority figures or pain or threats, you can perfectly counter it with the internal pressure of your faith and love. In this way, the higher the pressure exerted on you, the more energy you have to resist that pressure, unboundedly.

It’s likely not even something unknown to you. If you’re trans, you’ve already performed at least one act of true magic by choosing to transition. Becoming trans is radical self-love, and is an act of true magic. Being trans isn’t being “trapped in the wrong body” but precisely the opposite: it’s the absolute rejection of that narrative of entrapment in the categories society imposes. If the story goes “you can’t do a thing, it’s impossible”, then just tell a different story, one where it is possible, and then do it.

This is the nature of true magic. Congratulations: with just 3,000 words of relatively light reading, you have been handed on a platter what it took rationalist mages six years to derive from scratch. Welcome to Applied Metaphysics; you are almost ready to begin.